
Xulun Luo

Research & Development

R&D at the intersection of virtual production, robotics, and computer vision—aimed at making capture pipelines reliable, time-synced, and reproducible. Current projects span robust sensor-fusion camera tracking and planner-driven UE/AirSim simulation, generating synchronized ground truth for novel-view and dynamic-scene research. The throughline: turn messy sensing and planning into creator-friendly, research-grade workflows.

UWB + IMU Fusion Camera Tracking (WLab Virtual Production, 3 months)

Motivation

Why Camera Tracking is Critical for Virtual Production

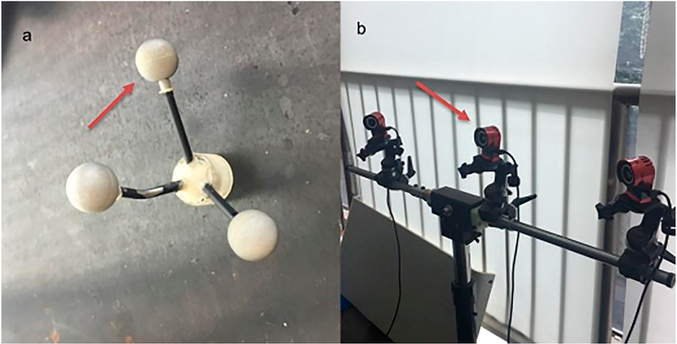

Virtual production relies on LED walls displaying real-time backgrounds that must match camera movement exactly; any tracking error breaks the illusion. Most productions currently use OptiTrack optical systems for this tracking, but optical markers have inherent limitations.

Optical marker occlusion challenges:

Virtual production sets suffer when crew, rigs, and equipment block OptiTrack markers, which causes tracking jitter and degraded pose solves. A radio-assisted fallback maintains continuity during these dropouts.

UWB's vertical limitation:

While UWB (ultra-wideband radio) provides robust indoor ranging, its accuracy is sensitive to anchor geometry, which commonly makes the Z-axis estimate unreliable. Pairing UWB with IMU (Inertial Measurement Unit) sensors stabilizes vertical tracking while adding rich motion data (quaternions, gyroscope, accelerometer).
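To make the geometry point concrete, here is a minimal sketch of textbook linearized least-squares trilateration from anchor ranges. It is not the vendor's solver; the anchor layout, tag position, and function names (`trilaterate`, `linear_rank`) are hypothetical. Differencing the range equations cancels every height term when all anchors share one height, so Z becomes unobservable.

```python
import numpy as np


def trilaterate(anchors: np.ndarray, ranges: np.ndarray) -> np.ndarray:
    """Least-squares tag position from anchor positions (N x 3) and ranges (N,).

    Subtracting |x - a_0|^2 = r_0^2 from each |x - a_i|^2 = r_i^2 cancels |x|^2
    and leaves the linear system 2 (a_i - a_0) . x = r_0^2 - r_i^2 + |a_i|^2 - |a_0|^2.
    """
    a0, r0 = anchors[0], ranges[0]
    A = 2.0 * (anchors[1:] - a0)
    b = r0**2 - ranges[1:] ** 2 + np.sum(anchors[1:] ** 2, axis=1) - np.sum(a0**2)
    # lstsq returns the minimum-norm solution when A is rank-deficient.
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


def linear_rank(anchors: np.ndarray) -> int:
    """Rank of the linearized system; below 3 means one axis is unobservable."""
    return int(np.linalg.matrix_rank(2.0 * (anchors[1:] - anchors[0])))


tag = np.array([2.0, 3.0, 1.5])
varied = np.array(
    [[0, 0, 0.5], [6, 0, 2.8], [0, 6, 2.8], [6, 6, 0.5], [3, 3, 3.0]], dtype=float
)
same_height = varied.copy()
same_height[:, 2] = 2.5  # hypothetical layout: every anchor at the same height

for name, anchors in [("varied heights", varied), ("same height", same_height)]:
    ranges = np.linalg.norm(anchors - tag, axis=1)  # noise-free ranges
    est = trilaterate(anchors, ranges)
    print(f"{name}: rank={linear_rank(anchors)}, estimate={np.round(est, 3)}")
```

With varied anchor heights the tag is recovered exactly. With equal heights the system drops to rank 2: X and Y are still exact, but Z collapses to the minimum-norm value 0. With real range noise, a nearly coplanar layout behaves similarly and amplifies vertical error.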

System / Pipeline

Hardware

UWB anchor network + on-camera tag: Radio-based positioning that keeps working when optical markers are blocked.

Dual IMU modules: High-rate motion sensing (orientation/acceleration) with Z-axis drift compensation.

Edge compute: Ingests sensor feeds and prepares data for streaming.

OptiTrack system: Ground-truth reference for validation and dataset alignment.

LTC (Linear Timecode) generator: Master clock ensuring frame-accurate synchronization across all systems.

Software

UWB mode switching: Requests only the needed data fields to reduce noise and bandwidth.

Byte-stream translation: Converts vendor formats to readable ASCII/JSON for debugging.

UWB ↔ IMU pairing: Fuses packets into a single tracking record when both arrive within a time window.

Streamed output: Maintains a stable tracking stream for consistent recording/playback.

Global sync: Locks the radio/IMU timeline to LTC/OptiTrack for frame-accurate capture.

Concurrency model: Handles serial and network I/O without blocking to maintain real-time rates.

Technical challenges & solutions (timeline)

Marker Occlusion → Need a Fallback

Challenge: OptiTrack loses tracking when markers are blocked. ❌

Solution: Prototyped a UWB-assisted tracker to complement OptiTrack. ✅

Result: Short occlusions no longer break pose continuity on set.

UWB Protocol Discovery & Normalization

Challenge: The documentation did not expose frame layouts, and anchors report only ranges/relationships, not absolute pose. ❌

Solution: Reverse-engineered the protocol through systematic analysis. ✅

Sent targeted read frames with different headers.

Logged differential responses.

Mapped bytes to corresponding fields.

Standardized outputs to ASCII/JSON.

Implemented on-demand mode switching for selective data retrieval.
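The "log differential responses, then map bytes" step can be sketched as a diff across captured frames. If only one physical quantity changes between reads (here, the tag is moved), the byte offsets that vary point at that field. The frame layout built by `make_frame` is entirely hypothetical and exists only to give the diff something to find; the real vendor format is not described here.

```python
def make_frame(anchor_id: int, range_mm: int) -> bytes:
    """Build a frame in a HYPOTHETICAL layout used only for this demo:
    55 AA | length | anchor id | range (uint16, little-endian) | status | checksum
    """
    body = bytes([0x55, 0xAA, 8, anchor_id]) + range_mm.to_bytes(2, "little") + bytes([0x00])
    return body + bytes([sum(body) & 0xFF])


def varying_offsets(frames: list[bytes]) -> list[int]:
    """Byte offsets whose value differs across the logged responses."""
    length = min(len(f) for f in frames)
    return [i for i in range(length) if len({f[i] for f in frames}) > 1]


def group_runs(offsets: list[int]) -> list[tuple[int, int]]:
    """Collapse adjacent offsets into (start, end) runs: candidate multi-byte fields."""
    runs: list[list[int]] = []
    for i in offsets:
        if runs and i == runs[-1][1] + 1:
            runs[-1][1] = i
        else:
            runs.append([i, i])
    return [(start, end) for start, end in runs]


# Same anchor, tag moved between reads: only the range bytes and checksum should change.
log = [make_frame(1, mm) for mm in (528, 600, 1210)]
changed = varying_offsets(log)
print("varying offsets:", changed)
print("candidate fields:", group_runs(changed))
print("decoded ranges:", [int.from_bytes(f[4:6], "little") for f in log])
```

The constant bytes (header, length, anchor ID, status) and the two varying runs (a 2-byte field and a trailing 1-byte field) are the kind of evidence that turns raw logs into a field map. Decoding the candidate run as little-endian then confirms it against the known distances.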

Z-axis Instability on UWB Alone

Challenge: Even with a 3D anchor layout, vertical noise and drift persisted. ❌

Solution: Introduced an IMU for vertical stabilization. ✅

Primary IMU provides quaternion orientation, angular rates, and linear acceleration.

Secondary IMU added for cross-validation to curb integration drift.

Approach consistent with published UWB+IMU fusion literature.
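The write-up does not specify the fusion filter. A common pattern in that literature is a Kalman filter that predicts with IMU acceleration and corrects with UWB position. Below is a minimal 1D vertical version under stated assumptions: the IMU vertical acceleration has already been rotated into the world frame with the quaternion and gravity-compensated, and the noise levels and simulated data are made up for illustration. The secondary-IMU cross-check is not modelled.

```python
import numpy as np


class VerticalKF:
    """Constant-velocity Kalman filter on the state [z, vz].

    Predict with world-frame, gravity-compensated IMU vertical acceleration
    (an assumption); correct with the noisy UWB z estimate.
    """

    def __init__(self, dt: float, accel_std: float, uwb_z_std: float):
        self.x = np.zeros(2)                      # state: [z (m), vz (m/s)]
        self.P = np.eye(2)                        # state covariance
        self.F = np.array([[1.0, dt], [0.0, 1.0]])
        self.B = np.array([0.5 * dt * dt, dt])    # how acceleration enters the state
        self.Q = accel_std**2 * np.outer(self.B, self.B)
        self.H = np.array([[1.0, 0.0]])           # UWB observes z only
        self.R = np.array([[uwb_z_std**2]])

    def predict(self, a_z: float) -> None:
        self.x = self.F @ self.x + self.B * a_z
        self.P = self.F @ self.P @ self.F.T + self.Q

    def update(self, z_meas: float) -> None:
        innovation = z_meas - (self.H @ self.x)[0]
        S = self.H @ self.P @ self.H.T + self.R   # innovation covariance (1x1)
        K = self.P @ self.H.T / S[0, 0]           # Kalman gain (2x1)
        self.x = self.x + K[:, 0] * innovation
        self.P = (np.eye(2) - K @ self.H) @ self.P


# Simulated static tag at z = 1.5 m, sampled at 25 Hz (made-up noise levels).
rng = np.random.default_rng(0)
dt, true_z, n = 1 / 25, 1.5, 500
uwb_z = true_z + rng.normal(0.0, 0.15, n)   # noisy UWB vertical estimate
imu_az = rng.normal(0.0, 0.05, n)           # IMU vertical accel noise around 0
kf = VerticalKF(dt, accel_std=0.05, uwb_z_std=0.15)
fused = []
for a, z in zip(imu_az, uwb_z):
    kf.predict(a)
    kf.update(z)
    fused.append(kf.x[0])
fused = np.array(fused)
print("raw UWB z std:", np.std(uwb_z[100:] - true_z))
print("fused z std:  ", np.std(fused[100:] - true_z))
```

The IMU carries the high-rate motion between UWB fixes, and UWB bounds the drift that pure integration would accumulate. A production filter would also estimate IMU bias and use the full 3D state.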

Edge Compute & Concurrency

Challenge: The ESP32 firmware in C required complex multi-threading and race-condition handling, which slowed iteration. ❌

Solution: Migrated to Raspberry Pi + Python. ✅

Leveraged Python threads for concurrent serial + UDP I/O.

Because the workload is I/O-bound, threads spend most of their time blocked in calls that release the GIL (Global Interpreter Lock), so the GIL has little impact.
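A minimal sketch of that threading layout: one blocking reader thread per serial port feeding a `queue.Queue`, and one sender thread forwarding JSON over UDP. The `fake_port` source is a hypothetical stand-in for a real port (with pyserial, `Serial.readline()` would play that role), and the message fields are illustrative rather than the project's actual format.

```python
import json
import queue
import socket
import threading
import time


def reader(name, read_line, out_q, stop):
    """One thread per serial port; read_line blocks like Serial.readline()."""
    while not stop.is_set():
        line = read_line()
        if line is None:  # demo source exhausted
            break
        out_q.put((name, time.monotonic(), line))


def udp_sender(in_q, addr, stop):
    """Drain the shared queue and forward each line as a small JSON datagram."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        while not (stop.is_set() and in_q.empty()):
            try:
                name, t, line = in_q.get(timeout=0.05)
            except queue.Empty:
                continue
            msg = {"src": name, "t": t, "raw": line.decode(errors="replace").strip()}
            sock.sendto(json.dumps(msg).encode(), addr)
    finally:
        sock.close()


def fake_port(lines, period_s):
    """Hypothetical stand-in for a serial port: blocks for period_s per line."""
    it = iter(lines)

    def read_line():
        time.sleep(period_s)  # sleeping, like blocking I/O, releases the GIL
        return next(it, None)

    return read_line


def run_demo():
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))
    rx.settimeout(0.5)
    q, stop = queue.Queue(), threading.Event()
    readers = [
        threading.Thread(target=reader, args=("uwb", fake_port([b"R,1,528\n"] * 3, 0.01), q, stop)),
        threading.Thread(target=reader, args=("imu", fake_port([b"Q,1,0,0,0\n"] * 3, 0.01), q, stop)),
    ]
    sender = threading.Thread(target=udp_sender, args=(q, rx.getsockname(), stop))
    for t in readers + [sender]:
        t.start()
    for t in readers:
        t.join()
    stop.set()  # readers are done; the sender drains the queue and exits
    sender.join()
    received = []
    try:
        while True:
            received.append(json.loads(rx.recv(4096)))
    except socket.timeout:
        pass
    rx.close()
    return received


if __name__ == "__main__":
    for msg in run_demo():
        print(msg)
```

The queue is the only shared structure, so the readers and the sender need no explicit locks. This sidesteps the race-condition handling that made the ESP32 version slow to iterate.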

Cross-sensor Packet Pairing (UWB ↔ IMU)

Challenge: Module timestamps drifted; naive "match by time" proved unreliable. ❌

Solution: Built a bounded time-window "catch" algorithm. ✅

Emits a fused record only when both packets arrive within a tuned window.

Drops unpaired packets to maintain data integrity.

Window parameters optimized through extensive lab testing.

Balances completeness (fewer dropped records) against correctness (no mismatched pairs).
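One way to implement a bounded-window catch is sketched below. It pairs on arrival time at the edge device rather than on module timestamps, which were unreliable. The 20 ms window, the class name `WindowPairer`, and the payload fields are hypothetical choices for illustration, not the tuned values.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Packet:
    src: str      # "uwb" or "imu"
    t: float      # arrival time on the edge device's monotonic clock (s)
    data: dict = field(default_factory=dict)


class WindowPairer:
    """Pair UWB and IMU packets whose arrival times differ by at most window_s.

    Assumes packets are pushed in arrival order. Anything that ages out of the
    window without a partner is dropped, so output is a full pair or nothing.
    """

    def __init__(self, window_s: float):
        self.window = window_s
        self.pending = {"uwb": deque(), "imu": deque()}
        self.dropped = 0

    def _expire(self, now: float) -> None:
        for q in self.pending.values():
            while q and now - q[0].t > self.window:
                q.popleft()
                self.dropped += 1

    def push(self, pkt: Packet):
        self._expire(pkt.t)
        other = self.pending["imu" if pkt.src == "uwb" else "uwb"]
        if other:
            mate = other.popleft()  # oldest partner still inside the window
            uwb, imu = (pkt, mate) if pkt.src == "uwb" else (mate, pkt)
            return {"t_uwb": uwb.t, "t_imu": imu.t, "uwb": uwb.data, "imu": imu.data}
        self.pending[pkt.src].append(pkt)
        return None


pairer = WindowPairer(window_s=0.020)
stream = [
    Packet("uwb", 0.000, {"range_m": 1.21}),
    Packet("imu", 0.010, {"qw": 1.0}),
    Packet("uwb", 0.040, {"range_m": 1.22}),   # its IMU partner never arrives
    Packet("uwb", 0.080, {"range_m": 1.23}),
    Packet("imu", 0.085, {"qw": 0.99}),
]
fused = [rec for rec in (pairer.push(p) for p in stream) if rec is not None]
print(len(fused), "fused records,", pairer.dropped, "dropped")
```

Widening the window recovers more pairs but raises the chance of pairing samples that do not belong together. That is the completeness-versus-correctness trade-off the window tuning addresses.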

Parsing, Rate Control, Transport

Implementation: Parsed vendor byte streams into human-readable ASCII/JSON. ✅

Enhanced with: Sequence IDs and validity flags for data integrity.

Delivered: Stable telemetry emission at 25 Hz via UDP with a jitter buffer.
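A minimal sketch of the parse step using Python's `struct` module. Each frame becomes a JSON-ready record carrying a sequence ID and a validity flag. The 8-byte layout, the 0x55 0xAA header, and the additive checksum are all hypothetical placeholders, since the vendor format is not published here. The jitter buffer is not shown.

```python
import itertools
import json
import struct

# HYPOTHETICAL layout for illustration only (little-endian, 8 bytes):
# header "55 AA" | length u8 | anchor id u8 | range_mm u16 | status u8 | checksum u8
FRAME = struct.Struct("<2sBBHBB")
HEADER = b"\x55\xaa"


def checksum(body: bytes) -> int:
    return sum(body) & 0xFF


def make_frame(anchor_id: int, range_mm: int, status: int = 0) -> bytes:
    body = FRAME.pack(HEADER, FRAME.size, anchor_id, range_mm, status, 0)[:-1]
    return body + bytes([checksum(body)])


def parse_frame(frame: bytes, seq: int) -> dict:
    """Decode one frame into a dict tagged with a sequence ID and validity flag."""
    record = {"seq": seq, "valid": False}
    if len(frame) != FRAME.size:
        return record
    header, length, anchor, range_mm, status, csum = FRAME.unpack(frame)
    record.update(anchor=anchor, range_m=range_mm / 1000.0, status=status)
    record["valid"] = (
        header == HEADER
        and length == FRAME.size
        and status == 0
        and csum == checksum(frame[:-1])
    )
    return record


seq = itertools.count()
good = make_frame(1, 1210)
corrupt = good[:4] + b"\xff" + good[5:]  # damaged range byte: checksum no longer matches
for frame in (good, corrupt, good[:5]):
    print(json.dumps(parse_frame(frame, next(seq))))
```

Invalid and truncated frames still emit a record with their sequence ID. Downstream consumers can therefore tell a dropped frame from a missing one.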

Global Synchronization for Capture/Training

Challenge: Required frame-accurate alignment of (UWB+IMU) with OptiTrack for dataset generation. ❌

Solution: Adopted LTC as the master timing source. ✅

Implemented an LTC "chase" script at 25 Hz.

Automatic retiming when drift exceeds a threshold.

Mirrors standard film/TV synchronization practices.

Next step: model training and data collection.
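A minimal sketch of an LTC chase loop at 25 fps, assuming "retiming" means re-anchoring a local monotonic clock to the incoming timecode whenever the predicted frame drifts past a threshold. The class name, the 1-frame threshold, and the exaggerated 1% clock error in the demo are illustrative assumptions, not the project's script.

```python
FPS = 25  # 25 fps LTC has no drop-frame variant


def tc_to_frames(tc: str, fps: int = FPS) -> int:
    """'HH:MM:SS:FF' -> absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_tc(n: int, fps: int = FPS) -> str:
    seconds, ff = divmod(n, fps)
    return f"{seconds // 3600:02d}:{seconds // 60 % 60:02d}:{seconds % 60:02d}:{ff:02d}"


class LtcChaser:
    """Follow incoming LTC with a local clock; re-anchor when drift exceeds a threshold."""

    def __init__(self, threshold_frames: int = 1, fps: int = FPS):
        self.fps = fps
        self.threshold = threshold_frames
        self.anchor_local = None  # local monotonic time (s) at the last retime
        self.anchor_frame = None  # LTC frame count at the last retime
        self.retimes = 0

    def local_frame(self, t_local: float) -> int:
        """Frame number the local clock predicts since the last anchor."""
        return self.anchor_frame + round((t_local - self.anchor_local) * self.fps)

    def on_ltc(self, tc: str, t_local: float) -> int:
        """Handle one decoded LTC frame; return the drift (frames) before any correction."""
        ltc_frame = tc_to_frames(tc, self.fps)
        if self.anchor_frame is None:
            self.anchor_local, self.anchor_frame = t_local, ltc_frame
            return 0
        drift = self.local_frame(t_local) - ltc_frame
        if abs(drift) > self.threshold:
            self.anchor_local, self.anchor_frame = t_local, ltc_frame
            self.retimes += 1
        return drift


chaser = LtcChaser(threshold_frames=1)
drifts = []
for n in range(1000):
    t_local = n / FPS * 1.01  # exaggerated: local clock runs 1% fast
    drifts.append(chaser.on_ltc(frames_to_tc(n), t_local))
print("retimes:", chaser.retimes, "max |drift|:", max(abs(d) for d in drifts))
```

Even with a deliberately bad local clock, drift never exceeds threshold + 1 frames, because each excursion past the threshold triggers a re-anchor to LTC.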

Outcomes

Stable 25 Hz telemetry:

Fused (UWB+IMU) data stream aligned to LTC/OptiTrack for clean Unreal Engine ingestion and model training.

Deterministic data integrity:

Bounded time-window "catch" ensures either complete, trustworthy fused records or none, never partial data.

Minimal temporal drift:

Average drift of ~1 frame over 30-minute captures at 25 Hz using LTC "chase" retiming.
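For scale, the arithmetic behind that figure: at 25 Hz one frame lasts 40 ms, and a 30-minute capture spans 45,000 frames. About one frame of drift therefore corresponds to a relative timing error of roughly 22 parts per million.

```latex
\Delta t_{\text{frame}} = \frac{1}{25\ \text{Hz}} = 40\ \text{ms}, \qquad
N = 30 \times 60 \times 25 = 45\,000\ \text{frames}, \qquad
\frac{1}{N} = \frac{40\ \text{ms}}{1800\ \text{s}} \approx 2.2 \times 10^{-5} \approx 22\ \text{ppm}
```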

Production-ready resilience:

Maintains practical continuity when optical tracking degrades, improving capture-session reliability and providing a consistent pipeline for future optimization.

Demo

Tartan-Dynamic — 3D Reconstruction Benchmark in Dynamic Scenes with Novel Views (AirLab, 2.5 months)

Motivation

Interpolation ≠ Generalization:

Most benchmarks test only interpolation along the filmed paths: typical "even/odd" or "every 8th frame" splits do not create true novel views, so models can succeed without demonstrating extrapolation.

Low dynamic pressure:

Existing datasets often feature one or a few moving objects in views dominated by static content, so methods that assume "mostly static" scenes appear strong but fail when motion fills the frame.

SLAM/SfM fragility under motion:

Pipelines that assume most optical flow comes from the static background degrade or collapse when most pixels move or objects deform and occlude each other.

Too small for real-world applications:

Many datasets cover a single room or street segment (≪ 1 km²) with limited viewing angles and baselines, under-stressing large outdoor scenes and long-baseline occlusion handling.

Tooling gap for novel-view evaluation:

Coverage across indoor/outdoor × static/dynamic scenes is inconsistent; truly novel-view splits, especially for dynamic outdoor scenes, are rare, and rendering is often offline only.

System / Pipeline

Simulation & Planning

UE4.27 + AirSim: Authors large outdoor scenes, spawns a virtual drone, and emits RGB/Depth/Seg/LiDAR for planning.

airsim_ros_pkgs: Bridges sim sensors into ROS topics for planners and recorders.

Topic relay: Relays AirSim LiDAR to the input topic the obstacle-avoidance planner expects.

EGO-Planner v2: Builds local occupancy maps and optimizes smooth, collision-safe trajectories for autonomous drone obstacle avoidance.

RViz: Advances dynamics layer and visualizes clouds/paths.

Capture & Evaluation

Mapping pass: Flies the planner to write an occupancy grid (Octo/voxel) that bounds the feasible camera space.

Trajectory graph: Samples collision-safe camera routes inside the mapped volume.

Pose densification: Converts routes into time-indexed 6-DoF camera poses for rendering.

Novel-view selection: Uses a covisibility test to keep poses whose rays intersect voxels seen before time t.

Synchronized output: Renders RGB/Depth/Seg with aligned poses for training/metrics.
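Pose densification can be sketched as resampling waypoints at a fixed rate: positions are interpolated linearly and orientations with quaternion slerp. This assumes waypoints carry timestamps and unit quaternions in (w, x, y, z) order. The functions are illustrative, not the project's code.

```python
import numpy as np


def slerp(q0: np.ndarray, q1: np.ndarray, u: float) -> np.ndarray:
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    q0 = q0 / np.linalg.norm(q0)
    q1 = q1 / np.linalg.norm(q1)
    dot = float(np.dot(q0, q1))
    if dot < 0.0:          # q and -q are the same rotation; take the shorter arc
        q1, dot = -q1, -dot
    if dot > 0.9995:       # nearly identical: normalized lerp avoids dividing by ~0
        q = q0 + u * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(dot)
    return (np.sin((1 - u) * theta) * q0 + np.sin(u * theta) * q1) / np.sin(theta)


def densify(times, positions, quats, rate_hz):
    """Resample waypoint poses into time-indexed 6-DoF poses at rate_hz."""
    times = np.asarray(times, dtype=float)
    positions = np.asarray(positions, dtype=float)
    quats = np.asarray(quats, dtype=float)
    t_out = np.arange(times[0], times[-1] + 1e-9, 1.0 / rate_hz)
    poses = []
    for t in t_out:
        # segment index i such that times[i] <= t <= times[i + 1]
        i = min(int(np.searchsorted(times, t, side="right")) - 1, len(times) - 2)
        u = (t - times[i]) / (times[i + 1] - times[i])
        p = (1 - u) * positions[i] + u * positions[i + 1]
        q = slerp(quats[i], quats[i + 1], u)
        poses.append((float(t), p, q))
    return poses


# Two waypoints: move 1 m along x while yawing 0 -> 90 degrees over 1 s.
half = np.sqrt(0.5)
poses = densify([0.0, 1.0], [[0, 0, 0], [1, 0, 0]], [[1, 0, 0, 0], [half, 0, 0, half]], rate_hz=10)
t_mid, p_mid, q_mid = poses[5]
print(len(poses), t_mid, p_mid, q_mid)
```

At the midpoint the camera sits at x = 0.5 m with a 45° yaw, as expected for constant-rate motion between the two waypoints.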

Technical challenges & solutions (timeline)

Benchmark Gaps → Need True Novel Views

Challenge: Common train/val/test splits keep cameras on the same paths. ❌

Solution: Generated new camera paths by sampling within the seen-voxel envelope and filtering them with a covisibility map. ✅

Result: Test views are off-trajectory yet geometrically supported, which forces real extrapolation.
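A simplified covisibility check: cast rays from a candidate camera, find the first occupied voxel each ray hits, and keep the pose only if enough hits land on voxels already observed before time t. The 0.5 m voxel size, the 0.8 acceptance fraction, fixed-step ray marching, and the toy wall scene are all assumptions made for illustration.

```python
import math


def voxel_of(p, size):
    return tuple(math.floor(c / size) for c in p)


def first_hit(origin, direction, occupied, size, max_range):
    """March along a ray in half-voxel steps; return the first occupied voxel or None.

    Fixed-step marching can clip voxel corners; an exact voxel traversal
    (e.g. Amanatides & Woo) is the more careful choice.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    d = [c / norm for c in direction]
    step, s = size * 0.5, 0.0
    while s <= max_range:
        v = voxel_of([o + s * dc for o, dc in zip(origin, d)], size)
        if v in occupied:
            return v
        s += step
    return None


def covisible_fraction(origin, directions, occupied, seen_before_t, size=0.5, max_range=50.0):
    """Share of ray hits that land on voxels already observed before time t."""
    hits = [first_hit(origin, d, occupied, size, max_range) for d in directions]
    hits = [h for h in hits if h is not None]
    if not hits:
        return 0.0
    return sum(h in seen_before_t for h in hits) / len(hits)


def keep_pose(origin, directions, occupied, seen_before_t, min_fraction=0.8, **kw):
    return covisible_fraction(origin, directions, occupied, seen_before_t, **kw) >= min_fraction


# A wall of voxels at x in [10, 10.5) m; only its y < 0 half was observed before t.
wall = {(20, j, 0) for j in range(-10, 10)}
seen = {v for v in wall if v[1] < 0}
rays = [(1.0, dy, 0.0) for dy in (-0.3, -0.2, -0.1, 0.1, 0.2, 0.3)]
origin = (0.0, 0.0, 0.25)
print(covisible_fraction(origin, rays, wall, seen), keep_pose(origin, rays, wall, seen))
```

A pose that looks half at unobserved geometry is rejected, while one whose rays all land on previously seen voxels is kept. This is what keeps off-trajectory test views geometrically supported.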

Too-Static Scenes → Raise Dynamic Load

Challenge: Prior datasets under-represent frames where >50% of pixels move. ❌

Solution: Injected multiple dynamic actors with start/stop patterns and occlusion churn. ✅

Result: Stress-tests methods that assume a static background or steady motion.
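To check the ">50% of pixels move" criterion per frame, one option is to compare total per-pixel motion (for example, renderer motion vectors) against the flow that camera motion alone would produce. The residual marks pixels that are actually moving. The 1-pixel threshold, the function name, and the toy arrays below are assumptions.

```python
import numpy as np


def dynamic_fraction(total_flow, ego_flow, thresh_px=1.0, valid=None):
    """Fraction of (valid) pixels whose motion is not explained by camera ego-motion.

    total_flow: per-pixel motion (H x W x 2), e.g. from renderer motion vectors.
    ego_flow:   flow the static scene would show under camera motion alone (H x W x 2).
    """
    residual = np.linalg.norm(total_flow - ego_flow, axis=-1)
    moving = residual > thresh_px
    if valid is not None:
        moving = moving[valid]
    return float(moving.mean()) if moving.size else 0.0


ego = np.zeros((4, 4, 2))
ego[..., 0] = 2.0          # camera pan: every pixel shifts 2 px in u
total = ego.copy()
total[:, 1:, 1] += 3.0     # actors covering 3 of 4 columns also move 3 px in v
frac = dynamic_fraction(total, ego)
print(frac, "high-dynamic" if frac > 0.5 else "mostly static")
```

Subtracting ego-flow matters here. Under camera motion every pixel has non-zero flow, so thresholding raw flow would mark even a fully static scene as dynamic.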

Planner Bring-Up at Scale

Challenge: Out-of-the-box topics/timers caused brittle launches. ❌

Solution: Aligned topic names (relay LiDAR to planner input), enforced finite timers, and staged node startup. ✅

Result: Stable voxel updates and smooth B-splines; rapid iteration in RViz.

From Interpolation to Novel-View Metrics

Challenge: Interpolation PSNR/SSIM scores are inflated by near-duplicate frames. ❌

Solution: Built a metric mask from the covisibility test and scored only rays hitting time-compatible voxels. ✅

Result: Moving objects don't create "ghost penalties"; metrics reflect true reconstruction quality.
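A minimal masked-PSNR sketch. The mask is assumed to come from the covisibility test, with True marking time-compatible pixels, and images are assumed to be floats in [0, 1]. Excluding a "ghost" region where an actor has moved changes the score substantially.

```python
import numpy as np


def masked_psnr(pred, gt, mask, max_val=1.0):
    """PSNR over pixels where mask is True (H x W mask, H x W x C images)."""
    m = np.broadcast_to(mask.astype(bool)[..., None], pred.shape)
    if not m.any():
        return float("nan")
    mse = float(np.mean((pred[m] - gt[m]) ** 2))
    return float("inf") if mse == 0.0 else float(10.0 * np.log10(max_val**2 / mse))


gt = np.zeros((4, 4, 3))
pred = np.full((4, 4, 3), 0.1)    # small reconstruction error everywhere...
pred[:, 3] = 1.0                  # ...plus a "ghost" column where an actor has moved
mask = np.ones((4, 4), dtype=bool)
mask[:, 3] = False                # covisibility marks column 3 as not time-compatible
print(masked_psnr(pred, gt, mask), masked_psnr(pred, gt, np.ones((4, 4), dtype=bool)))
```

Here the masked score is 20 dB, which reflects the 0.1 reconstruction error. The unmasked score drops to about 6 dB because of the ghost column alone.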

Depth+Pose Flow Limits → Renderer Motion Vectors

Challenge: Ego-flow (Depth+Pose) attributes all motion to the camera, so it misses object motion and ignores deformation. ❌

Solution: Planning to use UE's Velocity/Motion Vector pass for per-pixel motion with a validity mask. ✅

Result (pending further experiments): Flow targets should remain valid when many pixels move or actors deform/occlude.
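The limitation is visible in the ego-flow formula itself. Each pixel is back-projected with its depth, moved by the camera's relative pose, and re-projected, which implicitly treats every point as static. A minimal sketch, assuming planar (z) depth, pinhole intrinsics `K`, and a pose (R, t) mapping camera-0 coordinates into camera 1:

```python
import numpy as np


def ego_flow(depth, K, R, t):
    """Optical flow induced purely by camera motion, from depth and relative pose.

    depth: H x W planar (z) depth in camera 0. K: 3x3 intrinsics.
    R, t: rotation and translation mapping camera-0 coordinates into camera 1.
    Every pixel is treated as a static point, so object motion and deformation
    are invisible to this formula.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w, dtype=float), np.arange(h, dtype=float))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3)
    rays = pix @ np.linalg.inv(K).T          # K^-1 [u, v, 1]^T; z component is 1
    X0 = rays * depth.reshape(-1, 1)         # back-project to 3D in camera 0
    X1 = X0 @ R.T + t                        # same static points seen from camera 1
    proj = X1 @ K.T
    uv1 = proj[:, :2] / proj[:, 2:3]         # perspective divide
    return (uv1 - pix[:, :2]).reshape(h, w, 2)


K = np.array([[100.0, 0.0, 2.0], [0.0, 100.0, 2.0], [0.0, 0.0, 1.0]])
depth = np.full((4, 4), 10.0)
# Camera moves +0.5 m along x, so static points shift by -0.5 m in its frame.
flow = ego_flow(depth, K, np.eye(3), np.array([-0.5, 0.0, 0.0]))
print(flow[..., 0].mean(), flow[..., 1].mean())
```

The result matches f·t_x/z = 100 × (−0.5) / 10 = −5 px for every pixel. A pedestrian walking across the frame would receive exactly the same −5 px, which is why per-pixel renderer motion vectors are the planned replacement.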

Outcomes

Working planning stack: AirSim LiDAR relayed to EGO-Planner v2 yields smooth, collision-aware camera paths with live ESDF/trajectory validation in RViz.

Deterministic bring-up: Documented UE/AirSim configs, ROS launch order, and topic remaps provide one-command startup on fresh machines.

Reproducible capture set: For each route, the system records synchronized RGB / Depth / LiDAR / Poses (+ intrinsics/extrinsics) with fixed naming and timestamps.

Novel-view readiness: Covisibility-filtered pose sampling prunes near-duplicates so evaluation truly tests extrapolation, not frame adjacency.

Dynamic-scene stress tests: Scripted start/stop actors inject occlusions and motion changes to pressure-test planning and view synthesis.

Flow GT roadmap: Motion Vector export plan (UE) in place to generate per-pixel optical flow ground truth for highly dynamic outdoor scenes.

Artifacts & manifests: Auto-generated route manifests (waypoints, velocities, timing) and per-sequence metadata enable quick re-runs and cross-machine portability.
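As an illustration of what a route manifest might contain, here is a hypothetical schema: waypoints with timing and velocities, capture rate, and recorded streams. It round-trips through JSON with the standard library. The field names and the `route_001` ID are invented for this sketch and do not describe the project's actual manifest format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Waypoint:
    t: float          # seconds from route start
    position: tuple   # (x, y, z) in metres
    velocity: tuple   # (vx, vy, vz) in m/s


@dataclass
class RouteManifest:
    route_id: str
    rate_hz: float
    waypoints: list = field(default_factory=list)
    streams: list = field(default_factory=lambda: ["rgb", "depth", "lidar", "pose"])

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> "RouteManifest":
        d = json.loads(text)
        # JSON turns tuples into lists; restore them so equality round-trips.
        d["waypoints"] = [
            Waypoint(w["t"], tuple(w["position"]), tuple(w["velocity"])) for w in d["waypoints"]
        ]
        return cls(**d)


m = RouteManifest(
    "route_001", 25.0, [Waypoint(0.0, (0, 0, 2), (1, 0, 0)), Waypoint(4.0, (4, 0, 2), (0, 0, 0))]
)
text = m.to_json()
print(text)
```

Because the manifest reloads losslessly, a re-run on another machine can rebuild the exact same route from the file alone.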
